Consuming Data From CoinCap REST Endpoints and WebSockets with Java

May 24, 2024

1. Introduction

Consuming Data from APIs and WebSockets with Java and Kafka

In the modern world of data-driven applications, the ability to efficiently consume data from various sources and process it is crucial. Technologies like Apache Kafka and Java have emerged as leading tools for handling such tasks due to their robustness, scalability, and reliability.

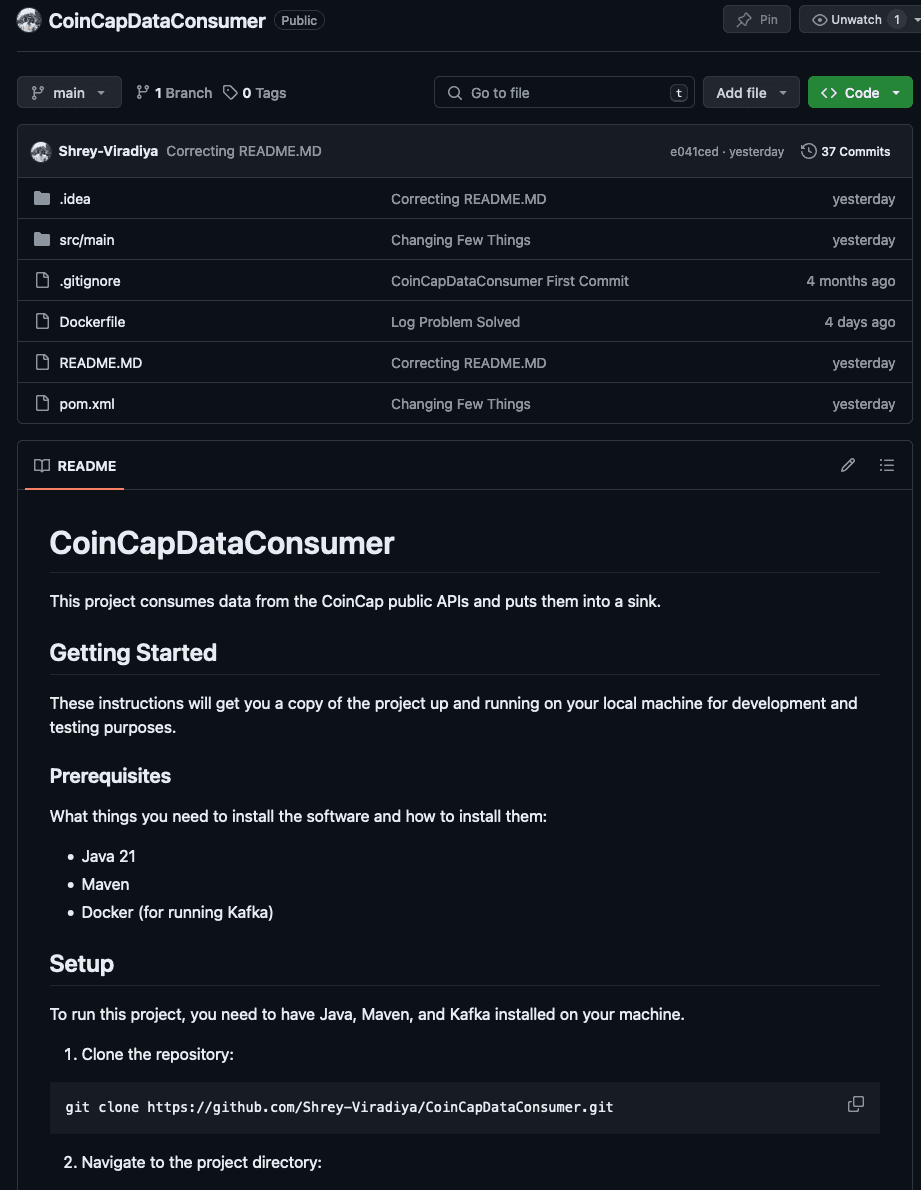

In this blog post, we will explore a practical application of these technologies through a project called CoinCapDataConsumer. This project, developed in Java and managed with Maven, consumes data from the CoinCap public APIs and WebSockets and puts it into a Kafka sink.

We will walk through the setup of the development environment, delve into the project structure and code, and discuss how to run the project. By the end of this post, you will have a solid understanding of how to build a data-consuming application using Java, Kafka, and Docker.

Get started by starring and forking the CoinCapDataConsumer repository.

2. Technologies Used

This project uses the following technologies:

- Java: The main programming language used to develop the application.

- Maven: A build automation tool used primarily for Java projects.

- Kafka: A distributed streaming platform used for building real-time data pipelines and streaming apps.

- Docker: A platform to develop, ship, and run applications inside containers.

- WebSocket: A communication protocol providing full-duplex communication channels over a single TCP connection.

- REST API: An architectural style for web services in which a client requests resources from a server over HTTP. Here, CoinCap exposes the API and the application acts as the client.

- Spotless: A code formatting plugin used in the project to ensure code consistency.

- Log4j: A fast, flexible logging framework for Java.

3. Understanding the Project Structure

The CoinCapDataConsumer project is structured in a way that follows standard Java project conventions. Here’s a brief overview of the key components:

- src/main/java/org/sv/data/App.java: This is the main entry point of the application. It contains the main method, which starts the application. The App class is responsible for reading the configuration file, initializing the Kafka producer, and starting the data consumers.

- src/main/java/org/sv/data/consumers: This package contains the classes responsible for consuming data from the CoinCap APIs and WebSockets. The RESTDataConsumer and WebSocketDataConsumer classes implement the logic for consuming data from REST APIs and WebSockets, respectively.

- src/main/java/org/sv/data/config: This package contains the ConfigObject class, which is used to hold the configuration data read from the YAML file.

- src/main/java/org/sv/data/dto: This package contains the Data Transfer Object (DTO) classes. These classes are used to hold the data consumed from the CoinCap APIs and WebSockets.

- src/main/java/org/sv/data/handler: This package contains the classes responsible for handling the data consumed from the CoinCap APIs and WebSockets. The DataStoringHandler and SimpleDataHandler classes implement the logic for handling the consumed data.

- src/main/java/org/sv/data/kafka: This package contains the KafkaSinkProducer class, which is responsible for producing messages to the Kafka sink.

- src/main/java/org/sv/data/socketendpoints: This package contains the KafkaProducingWebSocketEndPoint class, which is used as the endpoint for the WebSocket connections.

- pom.xml: This is the Maven Project Object Model (POM) file. It contains information about the project and configuration details used by Maven to build the project.

- README.md: This file provides information about the project, including how to set up the development environment and run the project.
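To make the relationship between the consumers and handler packages more concrete, here is a minimal sketch that uses only the JDK's built-in HTTP client. The names DataHandler, PrintingHandler, StoringHandler, and RestPoller are hypothetical stand-ins chosen for illustration, not the project's actual classes, and the sketch passes data to a handler instead of producing it to Kafka.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical handler contract; the project's real handler classes may differ.
interface DataHandler {
    void handle(String payload);
}

// Prints each payload to standard output.
class PrintingHandler implements DataHandler {
    @Override
    public void handle(String payload) {
        System.out.println("received: " + payload);
    }
}

// Keeps every payload in memory so it can be inspected later.
class StoringHandler implements DataHandler {
    private final List<String> stored = Collections.synchronizedList(new ArrayList<>());

    @Override
    public void handle(String payload) {
        stored.add(payload);
    }

    // Returns a copy of everything stored so far.
    List<String> snapshot() {
        synchronized (stored) {
            return new ArrayList<>(stored);
        }
    }
}

// Fetches one REST resource and passes the response body to a handler.
class RestPoller {
    private final HttpClient client = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(10))
            .build();
    private final DataHandler handler;

    RestPoller(DataHandler handler) {
        this.handler = handler;
    }

    // Builds a GET request that asks for a JSON response.
    static HttpRequest buildRequest(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Accept", "application/json")
                .timeout(Duration.ofSeconds(30))
                .GET()
                .build();
    }

    // Sends the request once and forwards the body only on HTTP 200.
    void pollOnce(String url) throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(buildRequest(url), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() == 200) {
            handler.handle(response.body());
        } else {
            System.err.println("unexpected status " + response.statusCode());
        }
    }
}
```

Calling new RestPoller(new PrintingHandler()).pollOnce(url), with url set to the REST endpoint from your configuration, would print one response body. Swapping in a different DataHandler changes what happens to the data without touching the polling code, which is the main reason to keep consumers and handlers separate.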

By understanding the structure of the project and the purpose of each component, you can better understand how the CoinCapDataConsumer application works.
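For the WebSocket side, the following is a minimal sketch built on the JDK's java.net.http.WebSocket client. It is not the project's KafkaProducingWebSocketEndPoint: the ForwardingListener and WebSocketSketch names are hypothetical, and each complete message goes to a plain callback rather than to a Kafka producer. The sketch mainly shows that a text message can arrive in several frames and has to be reassembled before it is forwarded.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.WebSocket;
import java.util.concurrent.CompletionStage;
import java.util.function.Consumer;

// Collects text frames into complete messages and forwards each one to a callback.
class ForwardingListener implements WebSocket.Listener {
    private final Consumer<String> sink;
    private final StringBuilder buffer = new StringBuilder();

    ForwardingListener(Consumer<String> sink) {
        this.sink = sink;
    }

    @Override
    public CompletionStage<?> onText(WebSocket webSocket, CharSequence data, boolean last) {
        buffer.append(data);
        if (last) {
            // The message is complete: hand it over and reset the buffer.
            sink.accept(buffer.toString());
            buffer.setLength(0);
        }
        // Ask for the next frame; overriding onText replaces the default that does this.
        webSocket.request(1);
        // Returning null signals that the data has already been fully processed.
        return null;
    }

    @Override
    public void onError(WebSocket webSocket, Throwable error) {
        System.err.println("WebSocket error: " + error);
    }
}

class WebSocketSketch {
    // Opens a connection to the given URL and blocks until the handshake completes.
    static WebSocket connect(String url, Consumer<String> sink) {
        return HttpClient.newHttpClient()
                .newWebSocketBuilder()
                .buildAsync(URI.create(url), new ForwardingListener(sink))
                .join();
    }
}
```

A call such as WebSocketSketch.connect(url, System.out::println), with url taken from your configuration, would print each message as it arrives. In the actual project the callback's role is played by the code that produces to Kafka.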

4. Running the Project

To run the CoinCapDataConsumer project, you would typically follow these steps:

- Build the Project:

Since this is a Maven project, you can use the mvn clean install command in the terminal to build the project. This command cleans the target directory, compiles your code, runs any tests, and installs the packaged version of your project into your local repository.

- Run the Application:

After building the project, you can run the application. The main class is org.sv.data.App. You can run it directly from your IDE (IntelliJ IDEA) or from the terminal using the java -cp target/myproject-1.0-SNAPSHOT.jar org.sv.data.App command. Replace myproject-1.0-SNAPSHOT.jar with the actual name of your jar file.

Please note that you need to provide the path to the configuration file as an argument when running the App class. The configuration file is read in the main method of the App class:

    ConfigObject applicationConfiguration = readConfigFromFile(args[0]);

So, when running the application, you need to provide the path to the configuration file. For example, if you’re running the application from the terminal, the command might look like this:

    java -cp target/myproject-1.0-SNAPSHOT.jar org.sv.data.App /path/to/config.yaml

Replace /path/to/config.yaml with the actual path to your configuration file.
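Because the path is read straight from args[0], starting the application without an argument would fail with an ArrayIndexOutOfBoundsException. A small guard like the one below could turn that into a clearer message. This is only a sketch: ConfigPathCheck and resolveConfigPath are hypothetical names, not part of the project, and the method checks the path without parsing the YAML.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

class ConfigPathCheck {
    // Returns the absolute config path from the arguments, or throws with a hint.
    static Path resolveConfigPath(String[] args) {
        if (args == null || args.length < 1 || args[0].isBlank()) {
            throw new IllegalArgumentException(
                    "Usage: java -cp <jar> org.sv.data.App <path-to-config>");
        }
        Path path = Paths.get(args[0]).toAbsolutePath().normalize();
        if (!Files.isRegularFile(path) || !Files.isReadable(path)) {
            throw new IllegalArgumentException(
                    "Config file not found or not readable: " + path);
        }
        return path;
    }
}
```

The returned path could then be converted with toString() and handed to the existing readConfigFromFile call.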

- Check the Logs: The application uses Log4j for logging. You can check the logs to see if the application is running as expected.

Make sure Docker and Kafka are running before you start the application, since it requires both. I used the Bitnami Kafka Docker image to run Kafka.
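One quick way to confirm that the broker port is open before starting the application is a plain TCP connection attempt, sketched below. BrokerCheck is a hypothetical helper, not part of the project, and the host and port you pass in (often localhost and 9092 for a local Kafka, though that depends on your Docker setup) are assumptions. A successful connection only shows that something is listening on the port, not that Kafka is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class BrokerCheck {
    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unresolved host: treat all as unreachable.
            return false;
        }
    }
}
```

For example, BrokerCheck.isReachable("localhost", 9092, 2000) would return false if the Kafka container has not been started or its port is not published to the host.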

5. Conclusion

Throughout this post, we’ve explored the CoinCapDataConsumer project, a Java application that consumes data from APIs and WebSockets and puts it into a Kafka sink. We’ve delved into the project’s structure, understanding the role of each component, and walked through the process of running the project.

We’ve seen how technologies like Java, Maven, Kafka, Docker, WebSocket, REST API, Spotless, Guava, and Log4j can be combined to create a robust and efficient data-consuming application. This project serves as a practical example of how these technologies can be used in real-world scenarios.

Whether you’re a beginner looking to learn more about data consumption or a seasoned developer interested in expanding your toolkit, we hope this post has provided valuable insights. As always, the best way to learn is by doing. So, don’t hesitate to clone the project, play around with the code, and experiment with different scenarios.

Remember, the journey of learning and improving as a developer is continuous. Keep exploring, keep learning, and keep coding!